November 27, 2024, by Xiaoxi Wang, Chief Editor of iAuto and C-Dimension Media

In the previous article “VLA Hype: Is ‘End-to-End’ Outdated?”, we introduced a new concept: the “world model.” According to the current industry understanding, the ultimate destination of “end-to-end” is the world model.

Why? Because end-to-end alone is insufficient for autonomous driving. The “black box” nature of end-to-end raises the performance ceiling while lowering the floor, creating a “seesaw effect.” Corner cases are endless, and the code grows unmanageable. As I mentioned in that article, “Seeking L4 with ‘End-to-End’ is Like Climbing a Tree to Catch Fish.”

So, how can these issues be resolved? Enter the world model.

01

HERE WE GO

A rough tally shows that more than 10 automakers and autonomous driving companies have now proposed world models. These include Tesla, NVIDIA, NIO, XPeng, Li Auto, Huawei, DiDi, Jueying, Yuanrong Qixing (Yuanqi), and others advancing projects internally.

This raises the questions: What is a world model? How is it created? And how does it function?

Tracing its origins, “World Models” first emerged in the field of machine learning.

In 2018, the NeurIPS machine learning conference featured a paper titled “Recurrent World Models Facilitate Policy Evolution,” which compared world models to the mental models studied in cognitive science. It argued that mental models are integral to human cognition, reasoning, and decision-making, and that the most critical of these abilities, counterfactual reasoning, is innate to humans.

Additionally, Jeff Hawkins, founder of Palm and author of “A Thousand Brains,” introduced the highly significant concept of the “world model” in AI.

Fast forward to February 16, 2024, when OpenAI stunned the world with its text-to-video model Sora, capable of generating 60-second videos from text prompts. This became a concrete representation of the world model.

Beyond autonomous driving, AI institutions such as Fei-Fei Li’s World Labs and Google DeepMind have also unveiled world models. Yann LeCun’s Meta FAIR team even released a navigation world model that predicts the next second’s trajectory based on prior navigation data.

Today, the industry consensus is that once this technology matures, autonomous driving will truly take off.

Although China’s auto industry is still grappling with the transition from “two-stage” to “one-stage” end-to-end solutions, Bosch Intelligent Driving China President Wu Yongqiao noted that one roadmap is becoming the industry standard: progress gradually from two-stage to one-stage end-to-end, and ultimately apply world models. This is a path from vehicle to cloud.

Moreover, examining the trajectory of autonomous driving development reveals something fascinating.

As the saying goes, “The art lies beyond the poem.” In recent years, every technology that has propelled autonomous driving forward, from BEV+Transformer and Occupancy Networks (OCC) to end-to-end and world models, originated not in autonomous driving itself but in artificial intelligence. The conclusion is clear: “Autonomous driving is fundamentally an embodied manifestation of AI.”

The world model appears to open a new window, and a new world, for pioneers like Tesla.

In 2023, Tesla’s head of autonomous driving introduced the “Universal World Model” at CVPR. This model can generate “possible futures” (entirely new videos) from past video clips and action prompts.
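The core idea of generating “possible futures” from a past clip plus an action prompt can be illustrated with a deliberately tiny stand-in. The sketch below is not Tesla’s model: it assumes the “video” has already been reduced to a one-dimensional list of vehicle positions, and the hypothetical functions `encode_history` and `predict_future` replace a learned encoder and generator with simple kinematics. What it does show is the interface: the same past combined with different action prompts yields different imagined futures.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class State:
    position: float  # metres along the lane
    speed: float     # metres per second


def encode_history(history: List[float], dt: float) -> State:
    """Compress a past 'clip' (observed positions) into a compact state.
    Hypothetical stand-in: a real world model would use a learned video
    encoder; here we keep the last position and a finite-difference speed."""
    if len(history) < 2:
        raise ValueError("need at least two observations")
    speed = (history[-1] - history[-2]) / dt
    return State(position=history[-1], speed=speed)


def predict_future(history: List[float], action: float, steps: int,
                   dt: float = 0.5) -> List[float]:
    """Autoregressively roll the state forward under an action prompt
    (a constant acceleration in m/s^2) and return the imagined positions."""
    state = encode_history(history, dt)
    future = []
    for _ in range(steps):
        speed = max(0.0, state.speed + action * dt)  # the car never reverses
        state = State(position=state.position + speed * dt, speed=speed)
        future.append(state.position)
    return future


past_clip = [0.0, 5.0, 10.0]  # cruising at 10 m/s, sampled every 0.5 s
for name, accel in {"keep": 0.0, "brake": -4.0, "accelerate": 2.0}.items():
    print(name, predict_future(past_clip, accel, steps=3))
```

Each action prompt produces its own branch of the future from one shared past, which is the property that makes such models useful for generating training and simulation scenarios.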

Wayve also unveiled its GAIA-1 model in 2023, which uses video, text, and action inputs to produce realistic, minute-long videos depicting multiple plausible future scenarios for autonomous driving training and simulation.

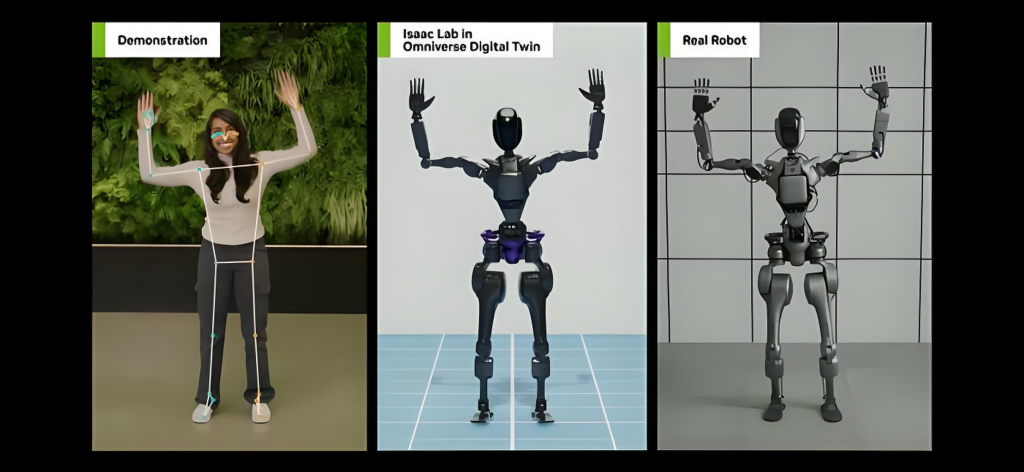

At the 2024 GTC conference, NVIDIA showcased advancements in world models with “The Next Wave of AI: Physical AI.”

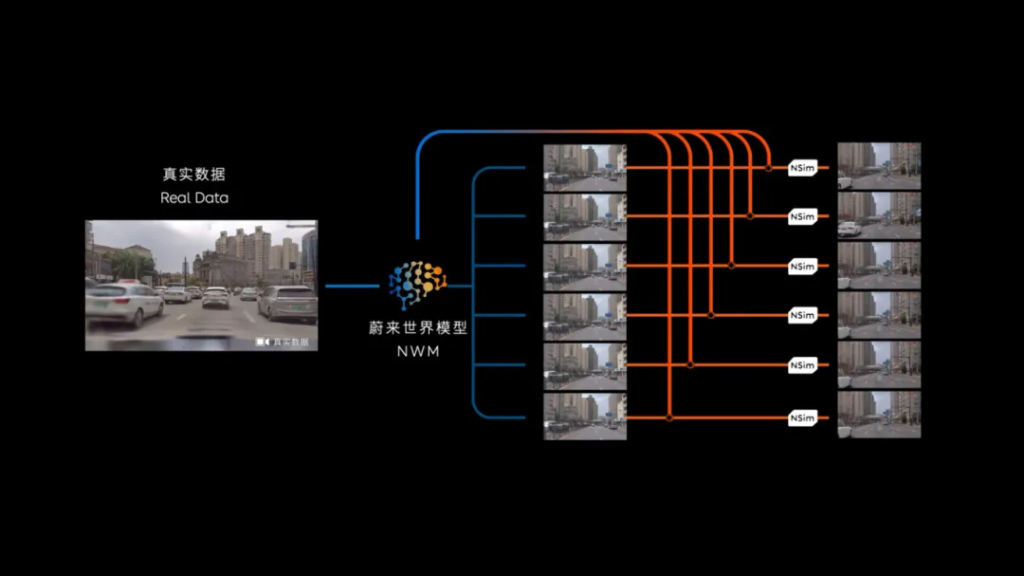

Among domestic automakers, NIO leads the pack. At NIO Day 2023, NIO announced its in-house world model R&D. A year later, at its Tech Day on July 27, 2024, NIO’s autonomous driving chief Ren Shaoqing released NWM (NIO World Model), China’s first intelligent driving world model, disclosing deeper technical details.

The NWM model is capable of full-scene data comprehension, long-sequence reasoning, and decision-making. According to NIO, it can predict 216 possible scenarios within 100 milliseconds and identify the optimal decision.
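NIO has not disclosed how NWM arrives at its 216 scenarios. One way such a number can arise, offered here purely as an assumption, is a search over discrete plans: 6 candidate actions over a 3-step horizon gives 6³ = 216 futures. The sketch below enumerates them, rolls each one out in a toy kinematic “world model,” scores it with a hand-written cost, and keeps the best. The action set, horizon, time step, 5 m safety margin, and cost weights are all hypothetical.

```python
import itertools

# Hypothetical discrete action set: longitudinal acceleration in m/s^2.
ACTIONS = (-4.0, -2.0, -1.0, 0.0, 1.0, 2.0)
HORIZON = 3  # decision steps per plan, so 6 ** 3 = 216 candidate futures
DT = 0.5     # seconds per step


def rollout(position, speed, plan):
    """Toy world model: imagine the ego car's (position, speed) after each action."""
    trajectory = []
    for accel in plan:
        speed = max(0.0, speed + accel * DT)  # the car never reverses
        position += speed * DT
        trajectory.append((position, speed))
    return trajectory


def cost(trajectory, obstacle, target_speed):
    """Lower is better: a large penalty for coming within 5 m of a stopped
    obstacle, plus a squared penalty for deviating from the target speed."""
    total = 0.0
    for position, speed in trajectory:
        if obstacle - position < 5.0:
            total += 1000.0
        total += (speed - target_speed) ** 2
    return total


def plan_best(position, speed, obstacle, target_speed=10.0):
    """Enumerate every plan, imagine its future, and return the cheapest."""
    plans = list(itertools.product(ACTIONS, repeat=HORIZON))
    best_cost, best_plan = min(
        (cost(rollout(position, speed, plan), obstacle, target_speed), plan)
        for plan in plans
    )
    return len(plans), best_plan, best_cost


print(plan_best(0.0, 10.0, obstacle=1000.0))  # (216, (0.0, 0.0, 0.0), 0.0)
print(plan_best(0.0, 10.0, obstacle=18.0))    # (216, (-2.0, -1.0, 0.0), 5.5)
```

On an open road the search simply holds speed; with a stopped vehicle 18 m ahead it picks a gentle brake-then-hold plan that stays outside the 5 m margin over the horizon. Exhaustive enumeration only works because the toy horizon is tiny: the number of plans grows exponentially with horizon length, so a production system would more likely sample candidates with a learned model.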

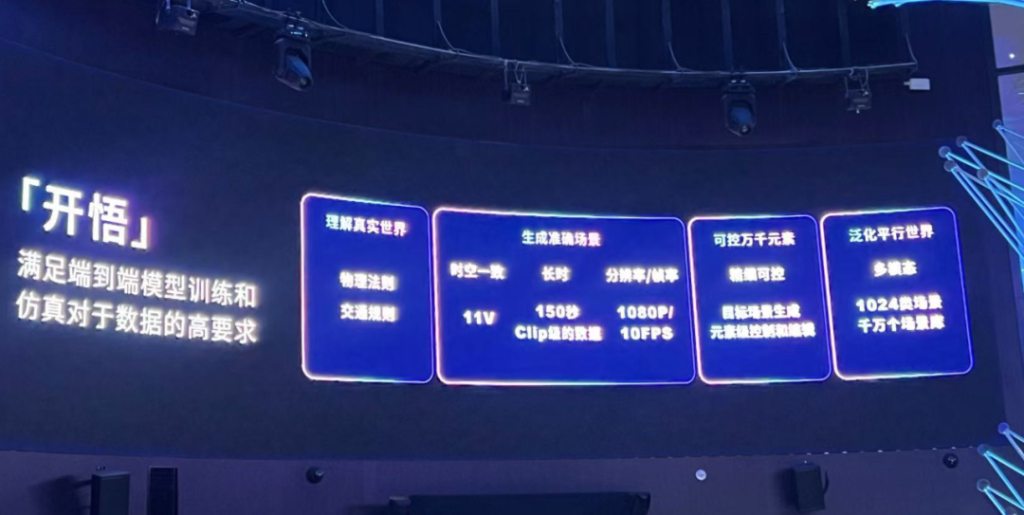

In late November, SenseTime Jueying hosted its inaugural AI DAY, revealing its “Kaiwu” world model, which generates simulated data and combines it with real-world vehicle data to reconstruct the physical world. SenseTime Jueying’s CTO Xiao Feng even boldly declared: “The ‘Big Four’ (DiDi, DeepRoute, Huawei, Momenta) is a thing of the past.”

Is the world model really this powerful?

02

HOW TO BUILD A WORLD MODEL?

Pony.ai CTO Lou Tiancheng places world models at the pinnacle of importance: “The world model is the most critical thing, bar none.”

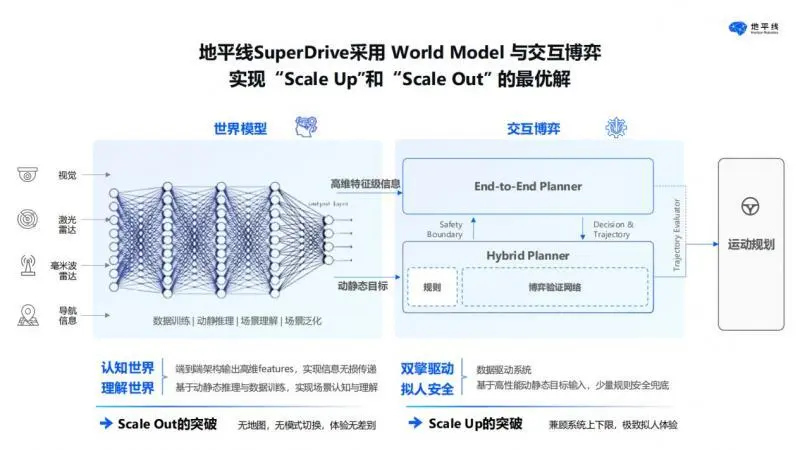

So, how does the world model function in autonomous driving? According to Horizon Robotics, it serves two purposes:

- Using generative AI to create predictive video data for training on diverse corner cases;

- Applying reinforcement learning to comprehend complex driving environments and output driving decisions from videos.

There are also two methods to construct world models:

- Pure imagination (“creating from nothing”);

- Enhancing existing information (e.g., using text, images, or videos as input to generate richer output).

As the ultimate weapon for autonomous driving, world models solve two major pain points:

- The high cost and inefficiency of 3D reconstruction;

- Simulation’s inability to replicate real-world data.

In essence, world models encompass simulation while far surpassing it.

This raises a question: Should the world model dominate real-world data, or merely supplement it?

Lou Tiancheng argues that real-world data alone can at best bring autonomous systems close to human-level performance. Only world model data can construct a more complex world and ultimately yield systems that surpass humans. His premise: “Autonomous driving safety must exceed human levels to be meaningful.”

Under this logic, a system trained on world model data that is better than human driving behavior should outperform humans, so the world model should supersede real-world data rather than merely supplement it.

However, world model quality is difficult to assess quantitatively. Current evaluations focus on four capabilities:

- Accuracy

- Diversity

- Controllability

- Generalization

Right now, there is no standard solution: every player is improvising.

For example, Horizon Robotics highlights two long-term values of world models:

- More accurate world understanding, reducing code volume, latency, network load, and error rates in autonomous systems.

- Generalization: world models form a universal understanding of complex driving environments rather than depending on inputs they have seen repeatedly.

Horizon’s “interactive gaming” approach centers on data-driven simulation and reinforcement learning. Rather than merely mimicking data, the system must learn to actively understand its environment. Here, the world model acts as a “system coach,” guiding the system on how to drive.

Meanwhile, SenseTime Jueying’s Kaiwu world model leverages 20 EFLOPS of cloud computing power to generate:

- Videos up to 150 seconds long

- 1080P resolution

- Views from 11 cameras

By combining real-world vehicle data with world model generation, Kaiwu produces corner case data. SenseTime acknowledges the challenge: most industry solutions generate 1- or 6-camera videos, whereas Kaiwu tackles 11 camera views, a leap that requires spatiotemporal consistency across views and correction of fisheye distortion.

In contrast, Tesla and DeepRoute.ai use a single system for two business models (ADAS and Robotaxi), continuously enhancing system capabilities via data training. Here, the world model acts as a complement to real-world data.

As Circular Intelligence notes, the industry consensus is that autonomous driving requires systems not just to match but to exceed human performance. Since real-world data can only approximate human driving, the world model stands as the only viable path to surpassing it.

So, back to the original question: Do you agree that “building data” to create a “brave new world” is the “only solution”?

Contact the author: 110411530@qq.com
